As AI keeps releasing its new “magical powers” to the market, tensions are rising in the tech community and in society as a whole. What kind of risk will humanity face? Does it sound like a science fiction movie to you? It is not! This is a real concern, and people from many fields are talking about it right now, while you are reading this article.

Many scientists and tech professionals are worried about what we can expect in the next few years as machine learning systems become more and more capable. As an example, in March an open letter co-signed by many AI representatives was published, asking for a six-month pause in AI development.

In parallel (and somewhat contradictorily), some of these same companies are laying off AI ethics professionals. It seems that ethical conflicts are setting the giants ablaze, right?

In other cases, renowned AI professionals are quitting their jobs. That’s the case of AI pioneer Geoffrey Hinton, who left Google last week. Although he made a point of not linking his resignation to the company’s ethics problems, his departure reinforced concerns around AI development. It also led people to question the lack of transparency as big tech companies make frightening progress in their research and discoveries while competing with each other in a disproportionate race.

Who is Geoffrey Hinton, the “Godfather of AI”?

Geoffrey Hinton is a 75-year-old cognitive psychologist and computer scientist known for his groundbreaking work in deep learning and neural network research.

In 2012, Hinton helped build a machine-learning program that could identify objects, which opened the doors to modern AI image generators, for example, and later to LLMs such as ChatGPT and Google Bard. He built it with two of his students at the University of Toronto. One of them is Ilya Sutskever, the co-founder and chief scientist of OpenAI, the company responsible for ChatGPT.

With an extensive academic background at major universities and awards such as the 2018 Turing Award, Geoffrey Hinton quit his job last week at Google, where he dedicated 10 years to AI development. Hinton now wants to focus on safe and ethical AI.

Hinton’s Departure and the Warnings

According to an interview with The New York Times, the scientist left Google so that he could have the freedom to talk about the risks of artificial intelligence. To clarify his motivations, he wrote on his Twitter account: “In the NYT today, Cade Metz implies that I left Google so that I could criticize Google. Actually, I left so that I could talk about the dangers of AI without considering how this impacts Google. Google has acted very responsibly.”

After leaving Google, Hinton raised concerns about overreliance on AI, privacy, and ethics. Let’s dive into the central points of his warnings:

Machines smarter than us: is that possible?

According to Geoffrey Hinton, it is only a matter of time before machines become more intelligent than humans. Referring to AI chatbots in a BBC interview, he said, “Right now, they’re not more intelligent than us, as far as I can tell. But I think they soon may be,” and described their dangers as “quite scary.” He explained that in artificial intelligence, neural networks are systems similar to the human brain in the way they learn and process information, which allows AIs to learn from experience, just like we do. This is deep learning.

Comparing digital systems with our biological ones, he highlighted: “…the big difference is that with digital systems, you have many copies of the same set of weights, the same model of the world.”

He added: “And all these copies can learn separately but share their knowledge instantly. So it’s as if you had 10,000 people and whenever one person learned something, everybody automatically knew it. And that’s how these chatbots can know so much more than any one person.”

AI in the hands of “bad actors”

Also speaking to the BBC, Hinton mentioned the real dangers of AI chatbots falling into the wrong hands, explaining the expression “bad actors” that he had used earlier when talking to The New York Times. He believes that such powerful intelligence could be devastating in the wrong hands, referring to large governments such as Russia’s.

The importance of responsible AI development

It is important to say that, in contrast to the many open letter signatories mentioned at the beginning of this article, Hinton does not believe we should stop AI progress. Instead, he believes the government has to take over policy development to ensure that AI keeps evolving safely. “Even if everybody in the US stopped developing it, China would just get a big lead,” Hinton told the BBC. He further mentioned that, because of international competition, it would be difficult to be sure that everybody had really stopped their research.

I have to say that I have already written some AI articles here, and this could be the hardest one thus far. It’s just not that simple to balance risks and benefits.

When I think about the many victories and advances the world is achieving through AI, it’s impossible not to wonder how society could grow and gain strong advantages if we have responsible AI development.

AI can help human development in so many fields, such as health research, where AI resources are already driving some great advances and discoveries.

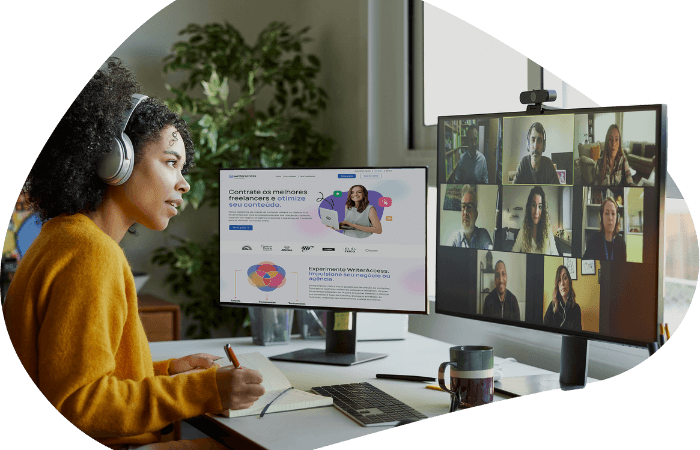

Here at Rock, as content marketers, we believe that AI efficiency can work in harmony with human creativity. We use AI writing tools daily, in a responsible way of course: avoiding misinformation and plagiarism, and prioritizing originality.

The world could certainly benefit greatly if we have this technological arsenal working towards the greater good, together with people and the human skills that machines cannot copy. Our emotional and creative minds are still unique and exclusive by nature.

That’s why I believe human-AI relations can work, as long as we have well-defined policies and regulations to ensure a safe and prosperous future for humanity.

Do you want to stay up to date with marketing best practices? I strongly suggest that you subscribe to The Beat, Rock Content’s interactive newsletter. There, you’ll find all the trends that matter in the digital marketing landscape. See you there!
