In digital marketing, market strategy and web technology are more closely connected than ever. Today, JavaScript and SEO are part of the same conversation.

For this reason, this article covers everything from the basic concepts to how you can improve your rankings by applying JavaScript best practices on websites and blogs.

First of all, it is important to understand that JavaScript and SEO are each complex disciplines in their own right and that departments usually handle them separately in their strategies.

However, anyone who wants to become a professional able to meet the needs of the industry must master both fields, which is why we wrote this post.

- What are the most important concepts of JavaScript and SEO?

- What role does JavaScript play in web pages concerning SEO?

- What are the SEO problems that happen with the misuse of JavaScript?

- What to do to facilitate the indexing of JavaScript pages in Google?

- What are the advantages of correctly configuring JavaScript elements for SEO?

What are the most important concepts of JavaScript and SEO?

The JavaScript programming language is one of the most used in the world — some of the best websites we have ever visited were built using it. But what are the fundamental concepts that unite both disciplines?

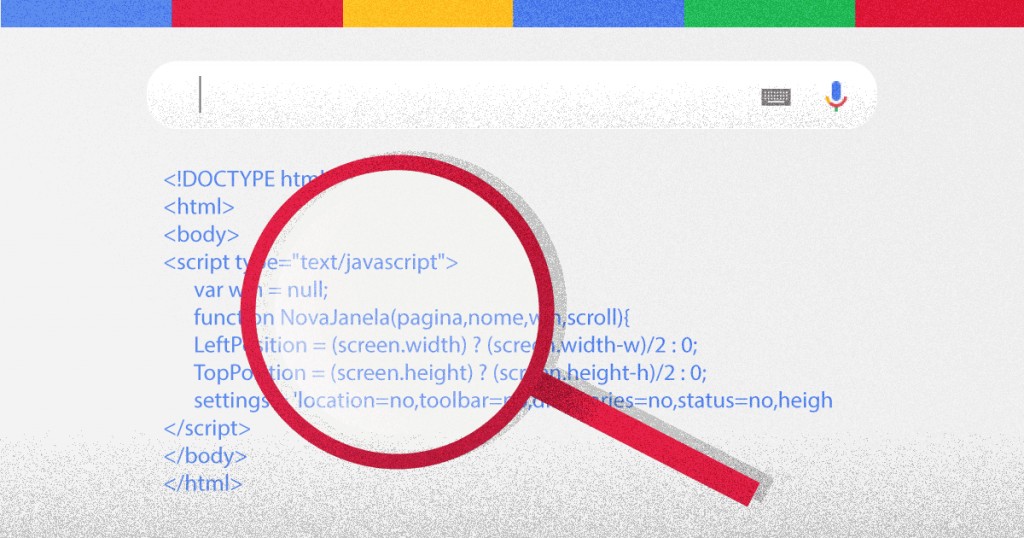

To properly understand JavaScript and SEO, the first thing to clarify is that, when it comes to ranking, search engines cannot always fully read or process JavaScript source code.

However, there is a way to prepare a website so that Google can interpret it when it starts the crawling and indexing process.

This matters most for sites that rely on AJAX, a technique for updating the content of a page that is already loaded.

AJAX allows applications to communicate with servers and show what’s new without having to reload the entire page.

Now, how does it work?

First, the robot that processes JavaScript works in three steps:

- Crawling

- Rendering

- Indexing

When Googlebot identifies a URL that uses this language, its first task is to verify that the site owner allows the page to be crawled.

To do so, it reads the robots.txt file and, if crawling is allowed, Google begins processing. Finally, after rendering the page and analyzing the resulting HTML, it indexes the content.
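
The robots.txt check described above can be sketched in a few lines of JavaScript. This is a simplified illustration, not how Googlebot is actually implemented: the function name `isCrawlAllowed` and the sample file are assumptions made for this example, and the sketch only understands `User-agent` and `Disallow` lines with plain prefix matching (no wildcards, no `Allow` rules, and rules from every matching group are simply combined).

```javascript
// Simplified robots.txt check (illustrative only).
// Supports "User-agent" and "Disallow" lines with plain prefix matching.
function isCrawlAllowed(robotsTxt, userAgent, path) {
  const agent = userAgent.toLowerCase();
  const disallowed = [];
  let groupApplies = false; // does the current group target this crawler?
  let inAgentLines = false; // are we inside a run of User-agent lines?

  for (const rawLine of robotsTxt.split(/\r?\n/)) {
    const line = rawLine.split("#")[0].trim(); // drop comments
    const separator = line.indexOf(":");
    if (separator === -1) continue; // blank or malformed line

    const field = line.slice(0, separator).trim().toLowerCase();
    const value = line.slice(separator + 1).trim();

    if (field === "user-agent") {
      const matches = value === "*" || agent.includes(value.toLowerCase());
      // Consecutive User-agent lines belong to the same group.
      groupApplies = inAgentLines ? groupApplies || matches : matches;
      inAgentLines = true;
    } else {
      inAgentLines = false;
      if (field === "disallow" && groupApplies && value !== "") {
        disallowed.push(value);
      }
    }
  }
  // The path is allowed unless it starts with a disallowed prefix.
  return !disallowed.some((prefix) => path.startsWith(prefix));
}

// Hypothetical robots.txt used only for this example.
const sampleRobotsTxt = `
User-agent: *
Disallow: /private/
`;

console.log(isCrawlAllowed(sampleRobotsTxt, "Googlebot", "/blog/javascript-seo")); // true
console.log(isCrawlAllowed(sampleRobotsTxt, "Googlebot", "/private/report"));      // false
```

If the scripts a page needs are stored under a disallowed path, the crawler cannot fetch them, so it is worth checking those URLs as well as the page itself.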

All of this happens because client-side JavaScript runs in the browser, not on the server. Therefore, search engines must act like a browser to be able to read the content.

What role does JavaScript play in web pages concerning SEO?

To answer that question, we must go back to AJAX, the acronym for Asynchronous JavaScript and XML.

This technique is used in web applications, on both desktop and mobile devices. Its function? To change the content without having to reload all the HTML.

So, does it affect SEO? The answer is yes! Google “usually”, in the words of its spokespersons, can render and index dynamic content, but that is not always the case. This ends up directly influencing search engine rankings.

Now, at this point, it is important to understand Google’s limitations when processing JavaScript. For example, most users have up-to-date browsers such as Chrome and Firefox, among others.

For years, however, Googlebot did not use the latest version of these browsers and rendered pages with Chrome 41. Since 2019 it has used a regularly updated version of Chromium, but scripts that fail or rely on unsupported features can still drastically affect crawling.

To check this, there are Google’s own tools, such as the mobile-friendly test or the URL Inspection tool in Search Console, where you can view how the page is rendered and any JavaScript exceptions or problems with the DOM (Document Object Model).

What are the SEO problems that happen with the misuse of JavaScript?

Although JavaScript makes it possible to show users dynamic websites full of interesting graphics and pleasant interfaces, it is easy to make several mistakes that negatively influence SEO and, consequently, the potential of the site.

Here we show the most common mistakes that you can make.

1. Neglect HTML

If the most important information on the site is generated only by JavaScript code, the crawler may have very little to work with when it processes the page for the first time.

Therefore, all fundamental content should be present in the HTML itself so that it can be quickly indexed by Google and other search engines.
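
One rough way to check this is to look at the HTML exactly as the server delivers it, before any script has run, and see whether the key phrases are already there. The sketch below is only an illustration: the function name `missingFromInitialHtml`, the sample markup, and the phrases are assumptions made for this example, and the tag stripping is done with simple regular expressions rather than a real HTML parser.

```javascript
// Returns the phrases that do NOT appear in the HTML as delivered by the
// server, that is, before any JavaScript has run.
function missingFromInitialHtml(html, phrases) {
  const visibleText = html
    .replace(/<script\b[^>]*>[\s\S]*?<\/script>/gi, " ") // drop script blocks
    .replace(/<style\b[^>]*>[\s\S]*?<\/style>/gi, " ")   // drop style blocks
    .replace(/<[^>]+>/g, " ")                            // strip remaining tags
    .replace(/\s+/g, " ")
    .toLowerCase();
  return phrases.filter((phrase) => !visibleText.includes(phrase.toLowerCase()));
}

// Hypothetical page: the heading is in the HTML, the offer is injected by a script.
const initialHtml = `
<html><body>
  <h1>Handmade leather bags</h1>
  <div id="app"></div>
  <script>
    document.getElementById("app").textContent = "Free shipping on all orders";
  </script>
</body></html>`;

console.log(
  missingFromInitialHtml(initialHtml, ["Handmade leather bags", "Free shipping on all orders"])
);
// [ 'Free shipping on all orders' ]
```

Anything the function reports exists only after JavaScript runs, which makes it a candidate for moving into the HTML.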

2. Misused links

Any SEO professional knows the importance that internal links have for positioning.

This is because search engines and their crawlers use links to recognize the connection between one page and another. Good internal linking also increases the time users spend on the site.

For JavaScript and SEO, it is very important to make sure that all links are correctly established.

This means using descriptive anchor text and HTML anchor tags that include the destination page URL in the href attribute.
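
As a rough illustration, the following sketch separates anchor tags that carry a real URL in href from those that depend on JavaScript alone. The function name `auditLinks` and the sample markup are assumptions made for this example; the regular expressions are deliberately simple and would not cope with every way HTML can be written.

```javascript
// Splits <a> tags into those with a usable href and those without one.
function auditLinks(html) {
  const crawlable = [];
  const notCrawlable = [];
  const anchorPattern = /<a\b([^>]*)>([\s\S]*?)<\/a>/gi;

  for (const [, attributes, innerHtml] of html.matchAll(anchorPattern)) {
    const hrefMatch = attributes.match(/(?:^|\s)href\s*=\s*["']([^"']*)["']/i);
    const href = hrefMatch ? hrefMatch[1].trim() : "";
    const text = innerHtml.replace(/<[^>]+>/g, "").trim();

    // Empty hrefs, "#" and "javascript:" pseudo-URLs give a crawler nothing to follow.
    const usable =
      href !== "" && href !== "#" && !href.toLowerCase().startsWith("javascript:");
    (usable ? crawlable : notCrawlable).push({ href, text });
  }
  return { crawlable, notCrawlable };
}

// Hypothetical navigation markup used only for this example.
const navigationHtml = `
  <a href="/pricing">Pricing</a>
  <a onclick="goTo('/contact')">Contact</a>
  <a href="javascript:void(0)">Blog</a>
`;

const report = auditLinks(navigationHtml);
console.log(report.crawlable);           // [ { href: '/pricing', text: 'Pricing' } ]
console.log(report.notCrawlable.length); // 2
```

Only the first link gives a crawler a destination it can follow; the other two work for users with JavaScript enabled but expose no URL.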

3. Accidentally prevent Google from indexing your JavaScript

This may be the most common of the three problems. As we already mentioned, Google cannot always render JavaScript completely.

On top of that, many websites make the mistake of including a “noindex” tag in the HTML.

When Google scans a website and reads the HTML, it may find that tag and move on.

That prevents Googlebot from coming back to run the JavaScript on the page, so any content or tags that the scripts would add are never seen.
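
A quick way to catch this mistake is to scan the initial HTML for a robots meta tag that contains the noindex directive. The sketch below is a minimal illustration: the function name `hasNoindexMeta` and the sample markup are assumptions made for this example, and it does not look at HTTP headers, which can also carry indexing directives.

```javascript
// Returns true when the HTML contains a robots (or googlebot) meta tag
// whose content includes the "noindex" directive.
function hasNoindexMeta(html) {
  for (const [tag] of html.matchAll(/<meta\b[^>]*>/gi)) {
    const name = (tag.match(/(?:^|\s)name\s*=\s*["']([^"']*)["']/i) || [])[1] || "";
    const content = (tag.match(/(?:^|\s)content\s*=\s*["']([^"']*)["']/i) || [])[1] || "";

    const targetsCrawlers = ["robots", "googlebot"].includes(name.trim().toLowerCase());
    const directives = content.toLowerCase().split(",").map((d) => d.trim());
    if (targetsCrawlers && directives.includes("noindex")) return true;
  }
  return false;
}

// Hypothetical page head used only for this example.
const pageHead = `
<head>
  <meta name="description" content="Handmade leather bags">
  <meta name="robots" content="noindex, follow">
</head>`;

console.log(hasNoindexMeta(pageHead)); // true
```

Running a check like this against the HTML the server returns, rather than against the page as seen in the browser, shows what the crawler finds before any script has a chance to change it.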

JavaScript remains an attractive and important part of web development, whether it is for brands, companies, e-commerce sites, or other purposes.

To prevent Googlebot and other crawlers from passing your pages by, it is important to understand how they work and thus enhance SEO, favoring the ranking of your web pages.

What to do to facilitate the indexing of JavaScript pages in Google?

Although it may seem like a summary of bad news so far, don’t worry!

Yes, it is possible to optimize a web page that uses JavaScript so that it is not only displayed correctly but can also be crawled, rendered, and indexed by Googlebot, helping you achieve the position on the SERPs that you are looking for.

Below are some tips to help you achieve this without dying in the attempt. Keep reading!

Optimize the URL structure

The URL is the first thing on the site that Googlebot crawls, so it’s very important. On web pages with JavaScript, it is highly recommended to use the History API’s pushState method, which updates the URL in the address bar and allows JavaScript-driven pages to have clean URLs.

A clean URL consists of text that is easy to understand, even for people who are not experts on the topic.

This way, the URL is updated each time the user clicks on a piece of content.
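
A minimal sketch of this idea follows. `pushState` is the real History API method; everything else here (the `toSlug` and `showArticle` names, the `/blog/` prefix, and the stand-in history object) is an assumption made for the example. The history object is passed as a parameter only so the snippet can also be run outside a browser.

```javascript
// Turns a title into a readable URL segment.
function toSlug(title) {
  return title
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-") // runs of other characters become one hyphen
    .replace(/^-+|-+$/g, "");    // trim leading and trailing hyphens
}

// Updates the address bar without reloading the page.
// In a browser, `historyApi` defaults to the built-in History API.
function showArticle(title, historyApi = globalThis.history) {
  const url = `/blog/${toSlug(title)}`;
  historyApi.pushState({ title }, "", url);
  return url;
}

// Stand-in object that records calls, so the example also runs in Node.
const recordedUrls = [];
const fakeHistory = {
  pushState(state, unused, url) {
    recordedUrls.push(url);
  },
};

console.log(showArticle("JavaScript & SEO: Best Practices", fakeHistory));
// /blog/javascript-seo-best-practices
```

For a URL created this way to be useful to crawlers, the server also needs to return the right content when that URL is requested directly.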

Reduce site latency

When the browser builds the DOM, an interface that represents HTML, XHTML, and XML documents as a standard set of objects, a very large HTML file can delay loading and, consequently, cause a significant delay for Googlebot.

When adding JavaScript to HTML, you can assign attributes such as async or defer so that the less important scripts do not block the page while it loads; this reduces load time and keeps JavaScript from hindering the indexing process.
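
To see which scripts might be holding a page up, you can list the external scripts that have neither an async nor a defer attribute. This is only a sketch under simple assumptions: the function name `findBlockingScripts` and the sample markup are made up for the example, inline scripts are ignored, and scripts with type="module" are treated as non-blocking because browsers defer them by default.

```javascript
// Lists the src of external scripts that have no async or defer attribute.
function findBlockingScripts(html) {
  const blocking = [];
  for (const [, attributes] of html.matchAll(/<script\b([^>]*)>/gi)) {
    const src = (attributes.match(/(?:^|\s)src\s*=\s*["']([^"']*)["']/i) || [])[1];
    const isNonBlocking =
      /(?:^|\s)(?:async|defer)(?=\s|=|\/|$)/i.test(attributes) ||
      /(?:^|\s)type\s*=\s*["']module["']/i.test(attributes);
    if (src && !isNonBlocking) blocking.push(src);
  }
  return blocking;
}

// Hypothetical page markup used only for this example.
const pageMarkup = `
  <script src="/js/analytics.js"></script>
  <script src="/js/chat-widget.js" defer></script>
  <script async src="/js/ads.js"></script>
`;

console.log(findBlockingScripts(pageMarkup)); // [ '/js/analytics.js' ]
```

Scripts on the resulting list are not necessarily a problem, since some code must run early, but they are the first place to look when a page is slow to render.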

Test the site often

As we’ve already mentioned, JavaScript may not appear to cause problems for crawling and indexing at first, but nothing can be said for sure.

Google can crawl and understand a lot of JavaScript, but some things remain very difficult for its crawler. There are many tools that simulate page loading and help you find errors.

You must find the content that Google could have trouble with and that can negatively affect the ranking of your page.

What are the advantages of correctly configuring JavaScript elements for SEO?

Finally, it is important to note that if you want to have a dynamic website with JavaScript, it is essential to follow the steps recommended in this article and by other experts.

Having options is vital if we continue down this path. If JavaScript elements are well configured, Googlebot will have no problem crawling your content, starting HTML processing, and, in the end, indexing it.

However, you should keep the recommendations in this post in mind. This is territory that professionals have still barely explored, and Google has not yet created a unified system to find and read JavaScript well.

The world of SEO is full of changes and interesting paths that you can learn in order to achieve the position you dream of in search engines through well-produced and well-executed strategies.

But if your website is slow, none of that will pay off. Here’s how your page speed can influence your sales performance.
